Light Transport

Many of my days on this project have been spent doing test renders. For whatever reason, I enjoy the process of rendering the same shot again and again, tweaking one setting each time, and comparing the results.

For anyone getting started, there are many guides for choosing the "ideal" settings for cinematic renders in Unreal Engine. There are links to some guides at the end of this article. My advice is to use the guides as a starting point, and then spend time rendering short clips to see how each setting does (or doesn't) affect the visuals.

A major question for this movie is, do I use deferred rendering or path tracing? Deferred rendering refers to Unreal Engine's main, real-time graphics pipeline. It is what you see in the working viewport using the standard "Lit" mode. But, it is also a rendering option in the Movie Render Queue, and can be adjusted for non-real-time cinematic renders.

Unreal Engine also has a path tracer. At the start, all I knew was that path tracing was slower, but supposedly created more realistic images. Deferred rendering, even when adjusted for cinematic quality, was still much faster. And, I knew that Blue Dot used deferred rendering, not the path tracer. So, my thinking was that I could ignore path tracing, which was a relief, because I already had so much else to learn.

But, something kept nagging me. I knew modern games, and Unreal Engine, used "ray tracing" for real-time graphics. The UE documentation said that deferred rendering was "hybrid raytraced" rendering. What is the difference then, between path tracing and ray tracing?

The answer was not essential to making this movie, but I had to know. So, I took a detour, and in the process became strangely fascinated with path tracing. This is a basic summary of what I learned.

First, a few sources:

This article from Nvidia's blog gives an explanation of ray tracing and path tracing, as well as a brief history of their implementations. The Path to Path-Traced Movies, a paper released by Pixar, has a more detailed history of different rendering methods and how path tracing grew to wide adoption in filmmaking. Physically Based Rendering: From Theory to Implementation fills in more of the history and is a great resource for learning about physics-based rendering methods, namely path tracing.

To answer my original question, ray tracing is a more general term that refers to a variety of methods for modeling light in a scene by "tracing rays." Path tracing is one of those methods. For a layman-friendly, visual demonstration of what it means to "trace rays" for image rendering, here is a video by Walt Disney Animation Studios.

The earliest ray tracing algorithms have been around for roughly 50 years, and path tracing was introduced in 1986. Over the decades, path tracing has emerged as the standard for generating physically accurate images. In order to describe what makes path tracing interesting and sets it apart from other ray tracing methods, it is helpful to illustrate the problem these algorithms are tackling.

When rendering a computer-generated image of a real-world scene, complete physical accuracy would require calculating all the light emitted and reflected by all points in the scene that are visible to the camera. But, calculating all the light reflected by a point requires calculating all the light arriving at that point, which requires calculating the light emitted and reflected by every point that sends light its way, and so on. For any scene with real-world complexity, no modern computer (or team of computers) can accomplish this.
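To get a feel for how quickly this blows up, here is a rough sketch in Python. It assumes a made-up simplification where every surface point gathers light from a fixed number of directions, and each of those rays hits a point that does the same. Real light is continuous, so the true problem is worse. The function name and the numbers are purely illustrative.

```python
def rays_needed(branching: int, bounces: int) -> int:
    """Rays traced from one visible point if every hit spawns `branching` new rays."""
    # The first bounce needs `branching` rays, the second branching**2, and so on.
    return sum(branching ** depth for depth in range(1, bounces + 1))


# Hypothetical setup: 100 directions gathered at every point.
for bounces in (1, 2, 4, 8):
    print(f"{bounces} bounce(s): {rays_needed(100, bounces):,} rays per visible point")
```

Even with this crude model, the ray count grows exponentially with the number of bounces, and that is for a single point seen by the camera.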

In order to render images in a reasonable amount of time (or real time), all ray tracers approximate this physical truth in some way. There are two broad ways of doing this. One is to simplify the calculations by ignoring complex light and surface properties. The other is to incorporate the more complex properties, but do the calculations for only a small sample of rays and approximate a result.

The first strategy makes intuitive sense. A simpler model of reality is easier to simulate. A renderer could use ray tracing to calculate simpler elements in a scene, like sharp shadows and reflections, and use other, faster (less accurate) technology to handle the more complex interactions, like indirect diffuse illumination. Section 4.3 of the Pixar paper mentioned above describes real-world productions, A Bug's Life and Cars, that used ray tracing in this limited manner.

What if you want to ray-trace the full range of light-surface interactions? Calculating all the rays in a scene is impossible. But, it is possible to calculate a random sample of rays and average their results. Interestingly, this actually works. Even with a sample of rays that is minuscule compared to physical reality, this method can produce photorealistic images.
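Here is a minimal sketch of that idea in Python. It is not a renderer. It estimates one simple quantity, the integral of cos(theta) over a hemisphere of directions, by averaging randomly chosen directions. I picked this integral because its exact value is known to be pi, so the estimate can be checked. The function name and sample counts are my own choices for illustration.

```python
import math
import random


def estimate_cosine_integral(num_samples: int, rng: random.Random) -> float:
    """Monte Carlo estimate of the integral of cos(theta) over the hemisphere."""
    pdf = 1.0 / (2.0 * math.pi)  # uniform density over the hemisphere's solid angle
    total = 0.0
    for _ in range(num_samples):
        # For directions chosen uniformly on a hemisphere, cos(theta) is itself
        # uniformly distributed on [0, 1], so it can be drawn directly.
        cos_theta = rng.random()
        # Each sample is weighted by 1 / pdf so the average estimates the integral.
        total += cos_theta / pdf
    return total / num_samples


rng = random.Random(1)
for n in (16, 256, 4096):
    print(n, estimate_cosine_integral(n, rng))
print("exact:", math.pi)
```

A path tracer does the same kind of averaging, except each sample is a whole light path through the scene instead of a single direction.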

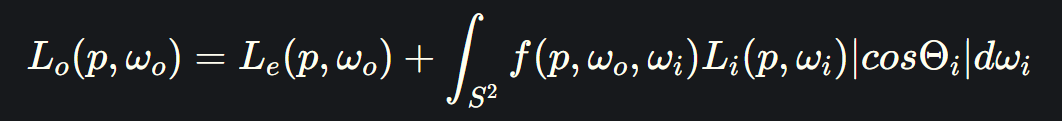

This is what the first path tracer accomplished. James Kajiya's 1986 paper first formalized the rendering equation, now sometimes called the light transport equation, which is a physics-based description of light scattering. Then, he explained how a Monte Carlo algorithm could approximate an answer to the rendering equation by probabilistically deciding which ray paths to trace through the scene, and integrating their results.
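For reference, here is the rendering equation in the hemispherical form most modern texts use. Kajiya's paper wrote it in a different but equivalent point-to-point form, so treat the notation below as the common textbook convention and not a quote from the paper. $L_o$ is the light leaving point $x$ in direction $\omega_o$, $L_e$ is emitted light, $f_r$ is the surface's reflectance function (BRDF), $L_i$ is incoming light, and $n$ is the surface normal. The second line is the Monte Carlo estimate, where $N$ directions $\omega_k$ are drawn at random with probability density $p$.

```latex
L_o(x, \omega_o) = L_e(x, \omega_o)
  + \int_{\Omega} f_r(x, \omega_i, \omega_o)\, L_i(x, \omega_i)\, (\omega_i \cdot n)\, d\omega_i

L_o(x, \omega_o) \approx L_e(x, \omega_o)
  + \frac{1}{N} \sum_{k=1}^{N}
    \frac{f_r(x, \omega_k, \omega_o)\, L_i(x, \omega_k)\, (\omega_k \cdot n)}{p(\omega_k)}
```

The recursion is hidden inside $L_i$: the light arriving at $x$ from direction $\omega_i$ is the light leaving some other point, which has its own copy of the same equation.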

This early path tracer did not model all physical light interactions. For example, it was missing subsurface scattering and volumes like fog or smoke. Over the years, these properties have been added to path tracing algorithms and their sampling methods have improved.

So, we have a cohesive, powerful technique for rendering physically accurate images: path tracing. What are we doing with all these other ray tracing algorithms, then?

Through the history of CGI, path tracing has been too slow for many applications. Calculating enough samples to get a clear, noise-free image takes time. Originally, it was too slow even for film productions. Today, most 3D animation and VFX work has transitioned to path tracing, but it is still too slow for real-time rendering. As a result, there has always been motivation to develop faster ray tracers.
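The reason clean images take so long is that Monte Carlo noise shrinks slowly: in general it falls with the square root of the sample count, so halving the noise costs about four times the samples. The Python sketch below shows this with a toy "pixel" where each random path either reaches a light (value 1.0) or doesn't (value 0.0). The 0.3 hit probability, the trial count, and the function names are arbitrary assumptions for illustration, not anything taken from a real renderer.

```python
import random
import statistics


def render_pixel(num_samples: int, hit_probability: float, rng: random.Random) -> float:
    """Average of random paths that each return 1.0 (reached a light) or 0.0."""
    hits = sum(1 for _ in range(num_samples) if rng.random() < hit_probability)
    return hits / num_samples


def pixel_noise(num_samples: int, trials: int = 200, seed: int = 0) -> float:
    """Standard deviation of the pixel value across repeated independent renders."""
    rng = random.Random(seed)
    values = [render_pixel(num_samples, 0.3, rng) for _ in range(trials)]
    return statistics.pstdev(values)


# Each step below uses 4x the samples of the previous one.
for n in (16, 64, 256, 1024):
    print(f"{n:5d} samples per pixel: noise ~ {pixel_noise(n):.4f}")
```

Each row uses four times the samples of the one before it, and the printed noise should drop by roughly half each time.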

According to the Unreal Engine 5.4 Raytracing Guide, published by Nvidia, in UE 4 there were five scene components handled by ray tracing: shadows, translucency, reflections, global illumination (GI), and ambient occlusion. Starting in UE 5, the new system called Lumen incorporated all of these components, except for ray traced shadows. Lumen can use hardware ray tracing with compatible GPUs, or software ray tracing on less powerful hardware.

It is not clear to me how much these different systems overlap, but this document shows how much technology is patched together under the hood to deliver real-time ray tracing.

But, in UE, when I change the viewport mode to Path Tracing, all of that goes away, and it's just the path tracer. In my research, one word I saw repeatedly used to describe path tracing was "elegant." And it is.

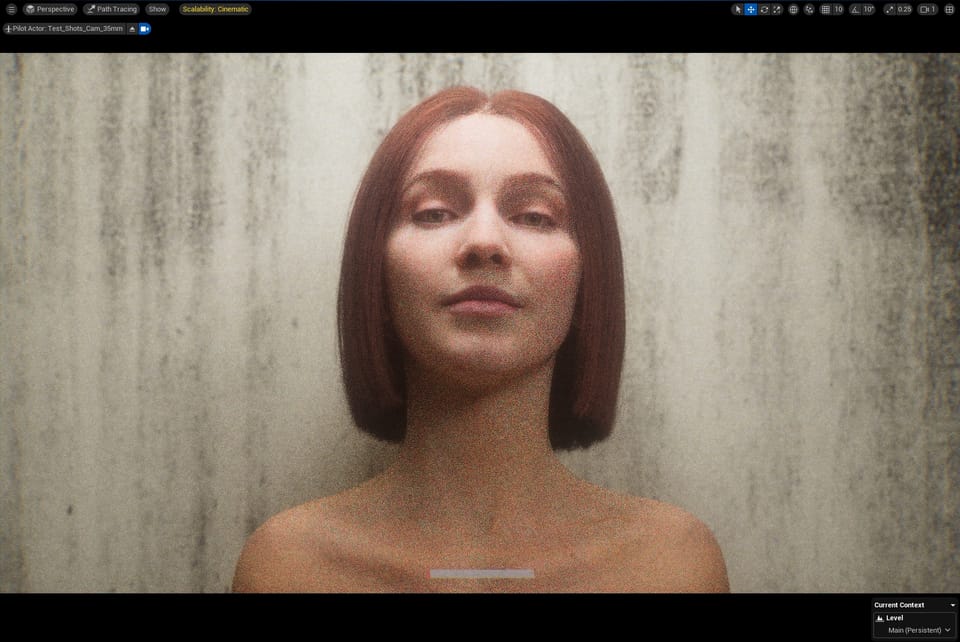

The pixels trickle into view as the samples accumulate... from the stochastic fuzziness, a vision emerges... converging on a perfect, unattainable truth.

My original plan was to use Unreal Engine's real-time framework and try to optimize it for cinematic renders. Blue Dot didn't need the path tracer, so I assumed this movie didn't either. Maybe it doesn't. But, I never predicted that I, personally, would come to need the path tracer.

My test renders are almost all deferred rendering. They do the job fast. In the time it takes deferred rendering to produce a 120-frame clip, path tracing can render about 3 frames. But, those frames are special. Sometimes I'll take a break from the computer to render a full clip with path tracing, and I look forward to the results.

Here are two renders of the same frame from a sequence I was using to play with hair simulations. The first image is deferred rendering tweaked for cinematic quality as best I know how. The second image is the path tracer with 1024 samples.

Well, for one, her hair color changes drastically. Oh, and her clothes are gone. (I don't know why, but I haven't been able to get UE's Chaos Cloth assets to render with the path tracer.) But, aside from that, doesn't it feel like her face comes to life in the second image?

I don't know for sure if the final renders for this movie will be done with the path tracer, but I am gravitating towards it. Either way, I will be using both methods as I learn more.

Ok, that's all for this history lesson and sermon.

As for what buttons to press in Unreal Engine to make your renders look better... there are four main places to adjust visual settings: the project settings menu, post process volumes, the Movie Render Queue settings, and console variables (CVars). Here are some guides:

The Bad Decisions UE 5 Beginner Tutorial has an episode covering the project settings and post process volumes, and another episode covering render queue settings and console variables.

Nvidia's guide for ray tracing in Unreal Engine, mentioned above, gives a more detailed description of the engine's ray tracing settings and what they do.

To dig deeper into UE's Movie Render Queue and get the most out of it, I strongly recommend this page from the Epic Games website. This explains what temporal and spatial samples actually do and the right ways to use them, if/when to use anti-aliasing, warmup frames, and CVars.

A word of warning for CVars: the options available, and their default settings, change frequently with Unreal Engine's updates. Also, most of the important image-quality CVars are covered by UE's scalability settings. Using the Cinematic quality setting might be all you need.

After setting Engine Scalability Settings to Cinematic, if there are CVars you are thinking of adjusting, you can type the variable name plus "?" in the console to get more information, including its current value. If LastSetBy shows "Scalability," that means the scalability settings already adjusted it.

Looking Forward to Real-Time Path Tracing

If you are interested in more technical background information, this video from "Josh's Channel" on YouTube gives a good visual explanation of path tracing and also discusses ReSTIR, which uses an innovative sampling method for the Monte Carlo integration. It is one of the many recent advances moving us towards real-time path tracing.

ReSTIR is gradually being integrated into Unreal Engine, and real-time path tracing (for direct and indirect lighting, but not yet all scene elements) is available on the NvRTX branch of UE. Richard Cowgill did a talk about this at Unreal Fest Seattle: