Why Make a Movie in a Game Engine?

When I wrote the script, I intended to shoot this movie the traditional way. My resources are limited, so I wanted a movie I could shoot in my own apartment, with a couple of actors, over a handful of weekends.

The story takes place in a not-too-distant future where brain-computer interfaces (BCIs) are commonplace. Two engineers/programmers who live and work together build a specialized computer that they use to connect their minds via their BCIs.

It would be grungy Sci-Fi, like Tetsuo: The Iron Man (but not black and white). I would build some kind of cluster computer in a metal rack, with all its hardware and cables exposed, and design a gnarly prosthetic to paste behind the actors' ears.

Tetsuo: The Iron Man (1989)

For over a year, totally confident I would make this movie, I worked on building this rack computer. I gathered old hardware from junk stores and online auctions and pieced the monstrosity together in my free time. It stood in the living room. My wife and I didn't entertain many visitors during this period.

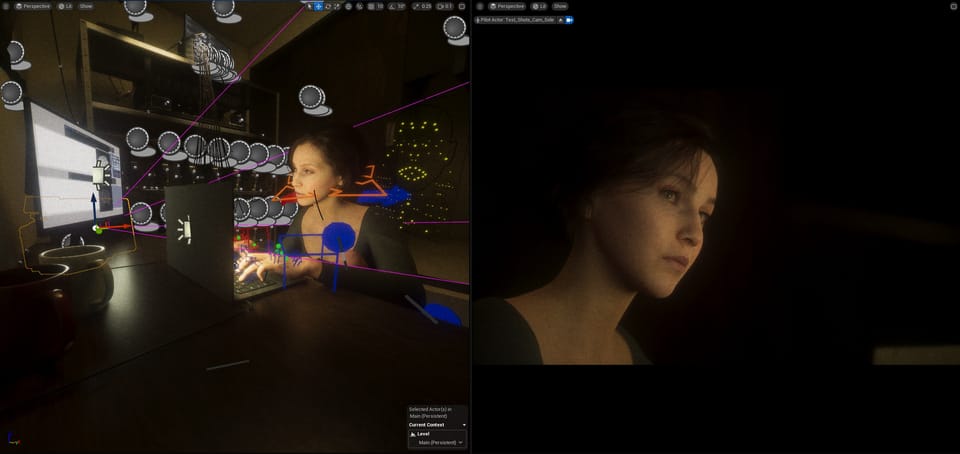

Meanwhile, I decided to do previs in Unreal Engine. Previs for a tiny-scale movie like this one is probably unnecessary. But, I had always been interested in games and game engines, and I saw it as a chance to learn the basics of UE while planning the shots. After measuring the dimensions of our living area, I erected some virtual walls inside a blank level. This is how simple the setup was:

The key for previsualization is that Unreal Engine has virtual cameras that you can calibrate to match real-world cameras. If your virtual set is built to scale, you can place the virtual camera inside it and get the same framing you would get if you placed a real camera in the real location.

This would be useful for planning the shoot in our cramped apartment. I could use the virtual set to determine whether the shots I envisioned were physically possible. For example, I learned that the framing I had in mind for a particular overhead shot would require placing the camera above the ceiling. To get that shot, I would have to lower the camera and use a wider lens, or shoot from an angle. So I quickly got a sense of what kind of equipment I would need.
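The overhead-shot problem comes down to simple pinhole-camera geometry. The width of the frame at a given distance equals that distance times the sensor width divided by the focal length. Unreal's Cine Camera lets you set the sensor (filmback) size and the focal length, which is what allows a virtual camera to match a real one. The sketch below is a rough, hypothetical illustration of that arithmetic and was not part of the original workflow. It assumes a full-frame 36 mm sensor, an ideal lens with no distortion or focus breathing, and made-up room dimensions.

```python
"""Rough framing check for an overhead shot using a pinhole-camera model.

All dimensions are hypothetical and chosen only to illustrate the arithmetic.
"""


def distance_for_frame(frame_width_m: float, focal_mm: float, sensor_width_mm: float) -> float:
    """Camera-to-subject distance needed for the frame to span frame_width_m.

    Pinhole model: frame_width / distance == sensor_width / focal_length.
    """
    return frame_width_m * focal_mm / sensor_width_mm


def max_focal_for_distance(frame_width_m: float, max_distance_m: float, sensor_width_mm: float) -> float:
    """Longest focal length whose required distance does not exceed max_distance_m."""
    return sensor_width_mm * max_distance_m / frame_width_m


SENSOR_WIDTH_MM = 36.0  # assumption: full-frame sensor width
CEILING_M = 2.5         # hypothetical ceiling height
SUBJECT_TOP_M = 0.75    # hypothetical: top of a table the actors sit at
FRAME_WIDTH_M = 2.4     # hypothetical: width of the area we want in frame

# Available distance from subject to ceiling (ignores the camera body and rig).
max_distance = CEILING_M - SUBJECT_TOP_M

for focal in (50.0, 35.0, 24.0):
    needed = distance_for_frame(FRAME_WIDTH_M, focal, SENSOR_WIDTH_MM)
    verdict = "fits" if needed <= max_distance else "camera would be above the ceiling"
    print(f"{focal:.0f} mm lens: needs {needed:.2f} m -> {verdict}")

longest = max_focal_for_distance(FRAME_WIDTH_M, max_distance, SENSOR_WIDTH_MM)
print(f"Longest usable focal length: {longest:.2f} mm")
```

With these made-up numbers, the 50 mm and 35 mm lenses would put the camera above the ceiling, while a 24 mm lens fits. This is the same "lower the camera and use a wider lens" trade-off that a scaled virtual set reveals.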

I scavenged free 3D assets from the internet to create a bare-bones set, and I used MetaHumans as stand-ins for the actors. There was no particular lighting or animation; I just posed the characters and added basic camera movements. The storyboards, or still frames I exported from UE, looked like this:

At the time, I was intent on making a live-action movie, so my foray into Unreal Engine ended here. The notion of making a movie virtually, in a game engine, was only a distant possibility. "Maybe someday."

But, over the months, as production neared, a shift happened. My interest in UE lingered, and the "maybe someday" idea spread its grasp over my mind. Only two things kept it at bay: my fondness for working with actors, and the fact that I had already poured a healthy portion of my time and budget into the janky, cable-strewn computer rack that defiled our living room. Throwing all of that away would be insane, right?

The truth was, I could see the limits of the movie I was planning to make. Given what I had built so far, and my remaining resources, I had a vague idea of what it would look like. It wasn't good enough. And, I was not in a position to endlessly shoot and experiment until it got good enough. I had enough budget to pay two actors and a cinematographer a meager wage for roughly ten days. Even if they were willing to throw in a few extra days, our time would be limited. My nightmare was to be days into production, looking at dull, amateurish footage, with no feasible way to reshoot it.

Instead, if I used the remaining budget to build a nice computer and dive into virtual production, the possibilities seemed endless.

It would take time... Given how much there was to learn, it would take more time than the production I had originally planned. But, I could work on it any hour of the day, without needing to coordinate around anyone else's schedule. And, I would be learning a range of new skills. Compared to the daunting task of making this movie on the cheap, constricted by physical reality, the prospect of building in the digital realm seemed freeing and invigorating.

But, there was a major question: is it possible?

The biggest challenge in making this movie will be animating believable human performances. My sense is that faces are the hardest part, and bodies are only slightly easier.

Skilled animators, in 2D and 3D, can tell stories and convey powerful emotion, but they rarely aim for photorealism. I had a stylistic vision for the live-action movie, and I want to preserve that as much as possible. That means I want the audience to feel they are looking at a real human.

Is that possible, given the tools available to a solo creator? Based on what others have been able to achieve, the short answer appears to be: probably not. Or, more optimistically, not yet. The tools to make this a reality are looming on the horizon.

In trying to answer this question, I searched for examples of both studio and independent work.

For years, many 3D artists have been creating photorealistic still images of humans. Two examples are Ian Spriggs and Şefki Ibrahim. Here is a portrait Şefki Ibrahim worked on, of Pedro Pascal as Joel from The Last of Us, rendered in Unreal Engine:

These results are amazing, but these faces don't move.

In terms of human "digital doubles" that can deliver a performance, unsurprisingly, the best results are in major Hollywood productions. I found one exception: Blue Dot.

Blue Dot was made by 3Lateral using MetaHumans and Unreal Engine. It was probably the largest factor in my decision to take the leap into virtual production, and why I chose UE over other tools. Unreal Engine and MetaHumans are available for free to hobbyists or micro-budget productions (details here). If they weren't, I would not be attempting this project.

According to this talk given by Đorđe Vidović and Adam Kovač, Blue Dot was rendered completely from the game engine, with no compositing or post-production required. I wouldn't say the movie is 100% photorealistic. But, more importantly, it feels human. It never veers into the uncanny. Knowing this is possible in engine is very motivating.

Unfortunately, I don't have access to much of the technology the 3Lateral team used to customize the MetaHuman seen in Blue Dot. They used a process similar to those used in large-budget films: photographing the actor's face from a variety of angles, in a variety of lighting conditions, with a variety of facial expressions. This data was used to create a 3D mesh that matched the actor, Radivoje Bukvić, as closely as possible. Then, Bukvić's performance was captured with a head-mounted camera, and that motion capture data animated the MetaHuman rig, apparently with minimal tweaking required.

They describe this process in more detail in the same talk I linked above. Here is another example of this workflow, implemented by Image Engine for the movie Logan (2017):

I don't have access to that kind of scanning equipment (or an actor to scan, for that matter). Any customizations I do on my MetaHumans will be manual, rather than data-driven. So, I am back to the original question: is it possible? I believe the production for Blue Dot took a few months total. If I spent a year (two?), could I achieve similar results?

Whatever the outcome, my gut tells me it will be a worthwhile journey. With the tech available to me, photorealism may be impossible. But, maybe I will discover a stylistic trick that gets compelling results. Or, maybe I will be rescued by the release of another innovative tool. The recent energy and development in this space is encouraging.